In this blog post, we explore the foundational technologies that power AI/ML infrastructure—from training and inference clusters to the specialized networking required to keep GPU-driven workloads running smoothly. While we touch on some technical topics like RoCEv2, RDMA, and congestion control mechanisms (PFC, ECN), the goal is to provide a broad understanding for readers who are curious about how AI systems are built and scaled. You don’t need to be a deep technical expert to follow along, but a little networking or systems background will help you appreciate the complexities involved.

Infiniband vs RoCE: Evolving High-Performance Networking

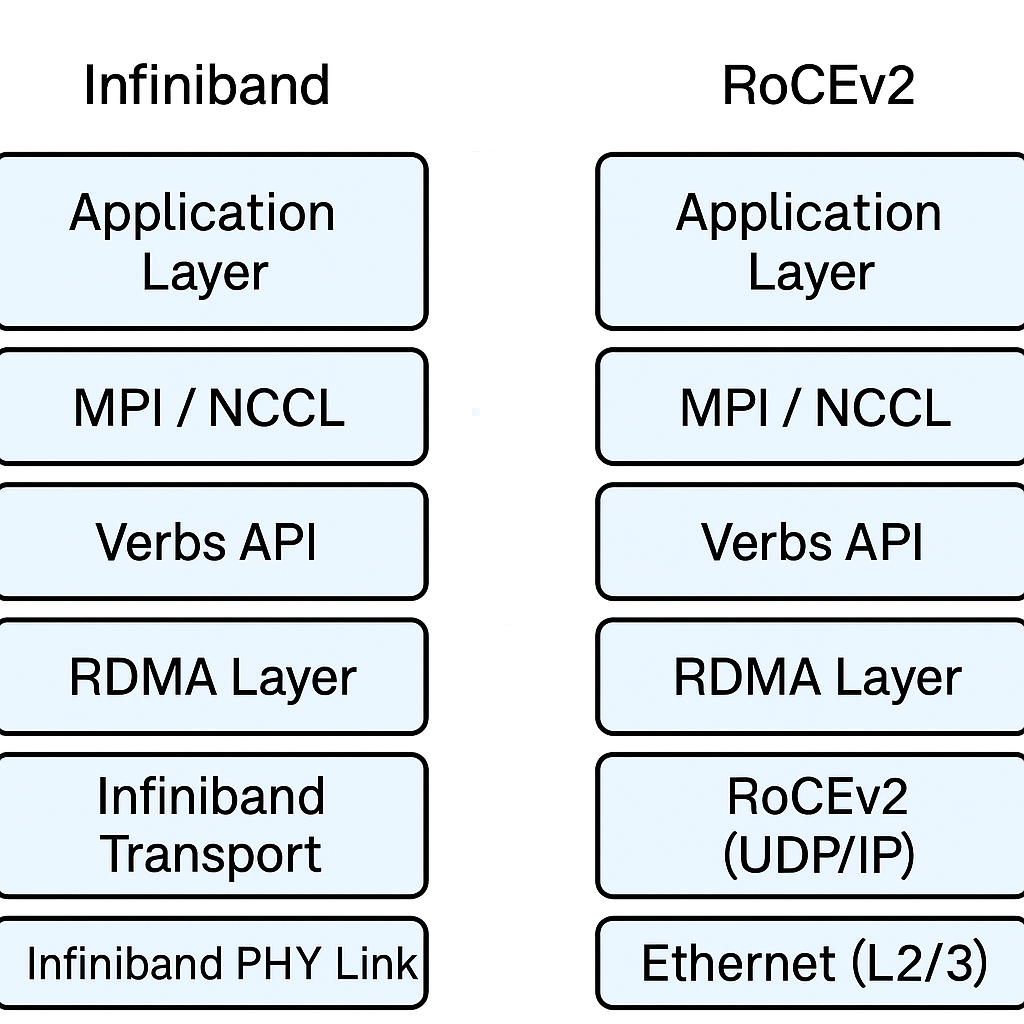

High-performance computing (HPC), AI workloads, and hyperscale data centers have traditionally relied on Infiniband to meet the stringent demands of low latency, high throughput, and reliability. Infiniband’s tight integration with RDMA and native support for congestion control and lossless behavior has made it a natural fit.

But the ecosystem is changing — Ethernet-based RDMA, especially RoCEv2 (RDMA over Converged Ethernet), is gaining traction. Hardware and software improvements are narrowing the performance gap, offering new flexibility for data centers built on commodity Ethernet.

How Things Are Today: The Infiniband Dominance

Infiniband offers a purpose-built stack for low-latency, zero-copy communication. It offloads most of the data path from the CPU to the NIC (HCA), allowing direct memory access between nodes via RDMA.

Key strengths:

- End-to-end congestion control using ECN (Explicit Congestion Notification)

- Lossless communication via credit-based flow control

- Low jitter and consistent latency

- Mature integration with MPI, NCCL for distributed training

It’s a gold standard for tightly-coupled applications such as large-scale scientific simulations or training massive deep learning models.

Infiniband vs RoCEv2 stack comparison — illustrating how RoCEv2 layers RDMA on top of UDP/IP to make it routable and Ethernet-compatible

Infiniband vs RoCEv2 stack comparison — illustrating how RoCEv2 layers RDMA on top of UDP/IP to make it routable and Ethernet-compatible

The Shift: Why RoCEv2 is Gaining Ground

RoCEv2 wraps RDMA in a UDP/IP envelope, making it routable across L3 boundaries and compatible with existing Ethernet infrastructure. With the right tuning, RoCEv2 can approach the lossless behavior of Infiniband.

What makes RoCEv2 appealing:

- Leverages commodity Ethernet hardware and cabling

- Reduces cost compared to Infiniband fabrics

- Allows scalable routing over IP networks

- Supported by modern NICs (NVIDIA, Intel, Broadcom)

Enabling Lossless Ethernet for RoCE

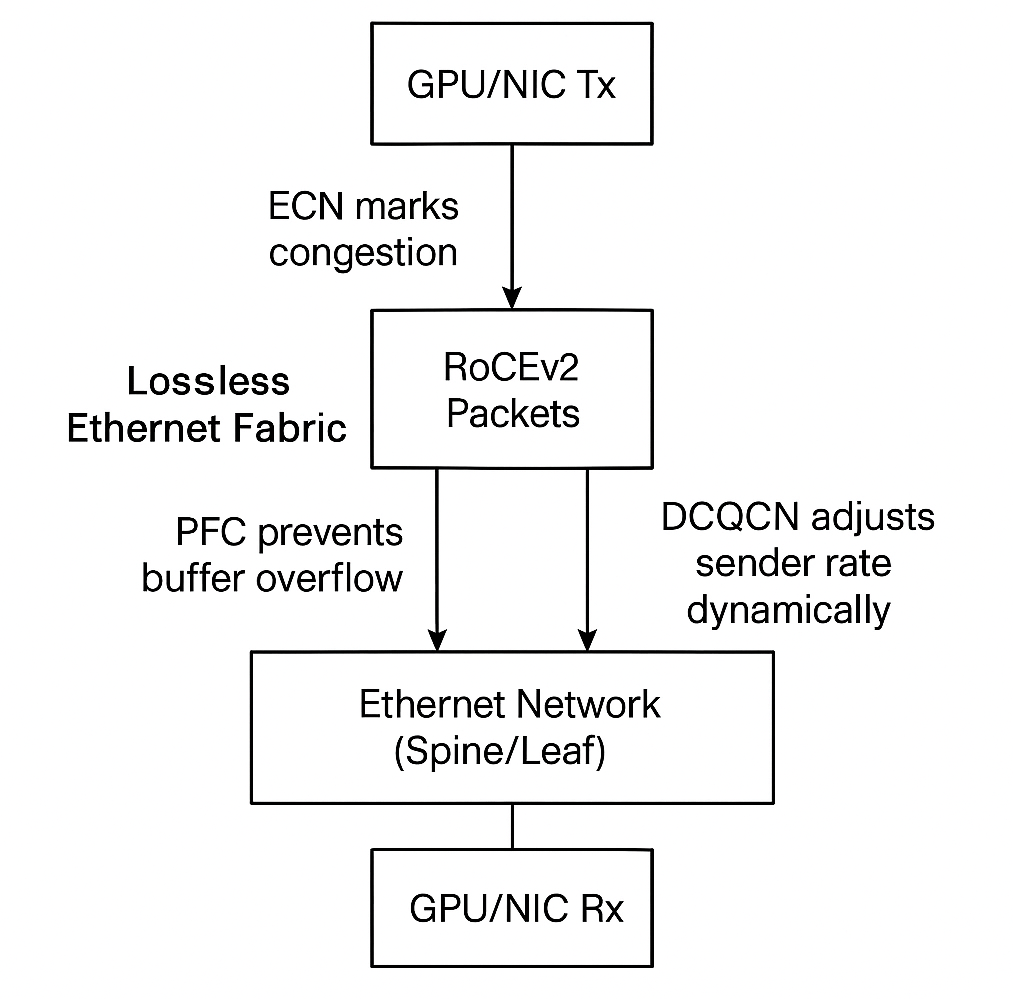

To mimic Infiniband’s lossless design, RoCE networks need careful tuning.

Key techniques:

- PFC (Priority Flow Control): Prevents packet loss for RDMA traffic by pausing specific classes of traffic.

- DCQCN (Data Center Quantized Congestion Notification): An ECN-based mechanism to throttle RDMA traffic during congestion.

- QoS Mapping: Ensures RDMA flows are prioritized and isolated from regular traffic.

Without these, RoCE suffers from performance hits due to retransmissions, as Ethernet is inherently best-effort.

Congestion Control mechanisms(PFC,ECN) in RoCEv2 stack in lossless Ethernet fabric

Congestion Control mechanisms(PFC,ECN) in RoCEv2 stack in lossless Ethernet fabric

Challenges with RoCEv2

RoCEv2 isn’t a drop-in replacement for Infiniband. Its Ethernet roots introduce complexity:

- Packet loss sensitivity: Retransmission is application-level, not handled by the NIC.

- Complex configuration: Tuning PFC and DCQCN correctly across all switches is non-trivial.

- Vendor inconsistency: Support for ECN, traffic classes, and hardware offload varies.

These challenges can make RoCE feel fragile if not well architected.

Looking Ahead: Convergence and Coexistence

The future likely holds hybrid deployments:

- Infiniband will continue to serve traditional HPC where ultra-low latency and predictability are critical.

- RoCEv2 is becoming the default in cloud-native and AI-focused data centers, especially where scaling and cost-efficiency matter more.

Enablers for this transition:

- SmartNICs with programmable congestion control

- Switches with ECN support and per-priority queuing

- Middleware like Libfabric and UCX that abstract the RDMA backend

Conclusion

Infiniband remains unmatched for deterministic, low-latency workloads, but RoCEv2 is becoming a strong contender. With Ethernet-based networks already widespread, RoCEv2 offers an opportunity to extend RDMA benefits across scalable, cost-effective, and routable infrastructures.

Proper QoS and lossless configuration are essential, but once in place, RoCEv2 can rival Infiniband in performance for many applications.

Understanding both Infiniband and RoCEv2 lets you design better fabrics for the next generation of AI, HPC, and data-intensive workloads.

Additional Resources

If you’re interested in diving deeper into the technical nuances of AI/ML infrastructure and network design, the following resources provide excellent insights and guidance:

- Cisco Data Center Networking Solutions: Addressing the Challenges of AI/ML Infrastructure - An overview of how Cisco addresses the complex requirements of AI/ML workloads with purpose-built networking solutions.

- Cisco Validated Design for Data Center Networking Blueprint for AI/ML Applications – A detailed, field-tested architecture tailored for scalable and high-performance AI/ML environments.

- Cisco Data Center Networking Blueprint for AI/ML Applications – An in-depth blueprint that provides practical design guidance for deploying AI/ML workloads in modern data centers.

- For those looking to explore this topic in greater depth, Toni Pasanen’s blog offers a wealth of insights drawn from extensive hands-on experience and research. It’s a valuable resource for anyone serious about understanding AI networking infrastructure.